I’m excited to share a new working paper co-authored with Burak Dalaman and Nathan Kettlewell. We study how large language models (LLMs) change academic writing, using a simple idea that allows a clean comparison between native and non-native English-speaking authors. While studying the impact of LLMs, we also use LLMs to infer authors’ likely English-language background from their names. This lets us split abstracts into native-authored and non-native-authored groups and track differences between them over time.

- Data: ~1.25M arXiv abstracts by ~1.04M authors, 2018–2024.

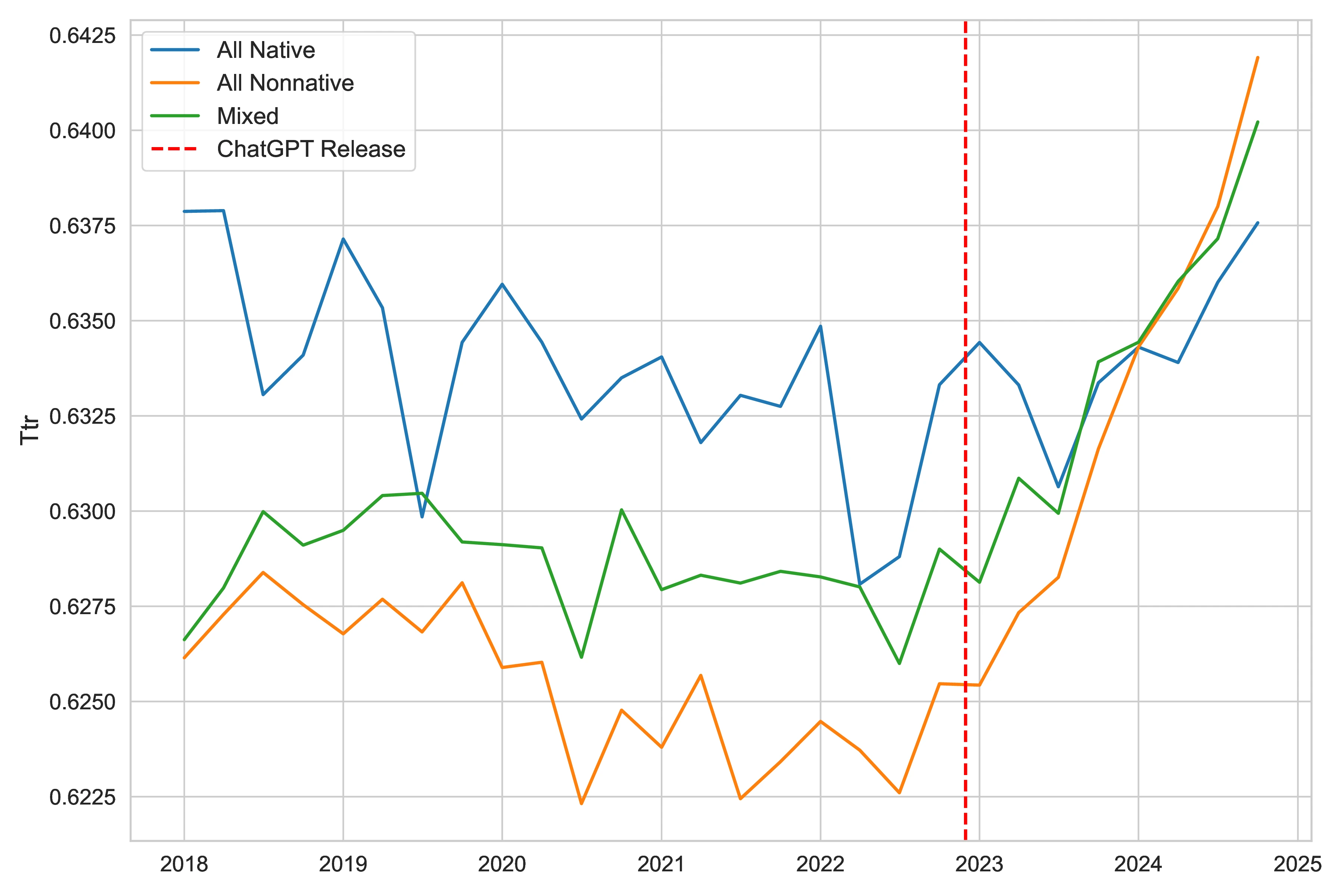

- Outcomes: lexical diversity (e.g., type-token ratio, TTR) and writing complexity (readability metrics).

- Shock window: the late-2022 release of ChatGPT.
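For readers unfamiliar with TTR, here is a minimal sketch of how a type-token ratio can be computed for a single abstract. The function name `type_token_ratio` and the simple regex tokenizer are assumptions made for illustration; this is not necessarily the exact preprocessing used in the paper.

```python
import re


def type_token_ratio(text: str) -> float:
    """Return unique word types divided by total word tokens (0.0 for empty text)."""
    # Assumption: lowercase the text and treat runs of letters/apostrophes as tokens.
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)


# Five tokens, four distinct types ("the" repeats).
example = type_token_ratio("The cat saw the dog")
```

TTR tends to fall as a text gets longer, so it is most meaningful when comparing texts of similar length, such as abstracts.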

What we find

- Bigger shifts for non-native authors. After late 2022, non-native-authored abstracts move more on both lexical diversity and writing complexity than native-authored ones.

- Gap closes. For example, the lexical diversity (TTR) gap between groups narrows markedly through 2023–2024.

Example: TTR time series shows convergence in lexical diversity post-ChatGPT.


- Computer science leads. Changes are largest in CS categories. Our interpretation is that CS authors tend to adopt new AI tooling faster. If so, other fields may show delayed effects as adoption diffuses.

- Adoption is slow. Even for a relatively easy use case (AI-assisted phrasing and editing), uptake among academics looks gradual, not instantaneous.
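To make the idea of a "gap" between groups concrete, here is a rough sketch of how a yearly native versus non-native difference in mean TTR could be tabulated. The function `yearly_gap`, the group labels, and the toy values are all hypothetical and invented for illustration; they are not the paper's data, results, or estimation method.

```python
from collections import defaultdict
from statistics import mean


def yearly_gap(records):
    """Map each year to (mean native TTR - mean non-native TTR).

    `records` is an iterable of (year, group, ttr) tuples, where group is
    "native" or "non_native" (hypothetical labels). Years missing either
    group are skipped.
    """
    by_cell = defaultdict(list)
    for year, group, ttr in records:
        by_cell[(year, group)].append(ttr)

    gaps = {}
    for year in sorted({y for y, _ in by_cell}):
        native = by_cell.get((year, "native"))
        non_native = by_cell.get((year, "non_native"))
        if native and non_native:
            gaps[year] = mean(native) - mean(non_native)
    return gaps


# Made-up toy values, NOT results from the paper; sign and size are arbitrary.
toy = [
    (2021, "native", 0.70), (2021, "native", 0.72),
    (2021, "non_native", 0.64), (2021, "non_native", 0.66),
    (2024, "native", 0.71), (2024, "native", 0.73),
    (2024, "non_native", 0.69), (2024, "non_native", 0.71),
]
gaps = yearly_gap(toy)
```

In this toy input the gap shrinks between the two years, which is the kind of pattern "gap closes" refers to. A real analysis would also need to account for field, abstract length, and time trends.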

We will update the data and the results soon. You can find the paper here. Let us know if you have any comments or questions: alifurkan.kalay@mq.edu.au

Cheers!